Running fiddles and interpreting results

If your fiddle doesn't have any syntax errors, the RUN button will be enabled and allow you to execute the fiddle.

Service allocation and synchronization

The Fiddle tool does not create a dedicated Fastly service for each fiddle. Instead, how a fiddle is executed depends on whether it is a VCL fiddle or a Compute fiddle.

VCL fiddles

For VCL, there exists a pool of pre-allocated Fastly services which can be claimed temporarily by individual fiddles when they need to execute. In practice, most fiddles are created, run a couple of times, and then never touched again, so it doesn't make sense for Fastly to create a service for each one.

This is why, when you click RUN, you may see:

Provisioning a Fastly service (exec48) to run this fiddle

Equally, when you make changes to a fiddle's VCL, the service must be recompiled and uploaded to all edge servers. During this process you will see a message similar to:

Waiting for fiddle configuration to update globally (upgrading from v1 to v2 on exec48)

Fiddle uses real Fastly services that are deployed across our global fleet, so this synchronization latency is indicative of the time required to push a configuration change to a real Fastly service. Typically this takes around one minute, so if you have just made an edit seconds before you press RUN, you may need to wait some time before results appear.
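The pooling behavior described above can be pictured with a small model. This is a hypothetical sketch and not how Fiddle is implemented: the `ServicePool` class, its `run` method, and the fiddle ID `abc` are invented for illustration, and `exec48` is simply reused from the sample messages.

```python
class ServicePool:
    """Toy model of a pool of pre-allocated services (hypothetical)."""

    def __init__(self, names):
        self.free = list(names)   # services not currently claimed
        self.claimed = {}         # fiddle ID -> [service name, deployed version]

    def run(self, fiddle_id, version):
        """Return the status messages that running this fiddle version would show."""
        messages = []
        if fiddle_id not in self.claimed:
            # First run: temporarily claim a service from the pool.
            name = self.free.pop(0)
            self.claimed[fiddle_id] = [name, version]
            messages.append(
                f"Provisioning a Fastly service ({name}) to run this fiddle"
            )
        else:
            name, deployed = self.claimed[fiddle_id]
            if deployed != version:
                # The fiddle was edited: the new version has to sync globally.
                messages.append(
                    "Waiting for fiddle configuration to update globally "
                    f"(upgrading from v{deployed} to v{version} on {name})"
                )
                self.claimed[fiddle_id][1] = version
        return messages


pool = ServicePool(["exec48"])
for version in (1, 1, 2):
    print(pool.run("abc", version))
```

In this model an unchanged fiddle produces no message on a repeat run, while an edited one triggers the synchronization wait.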

Compute fiddles

For Compute fiddles, we use an instrumented instance of our open source local testing server running in a container to execute your fiddle code. This means there is no deployment delay for Compute fiddles, but the behavior you see is a simulation of the live platform rather than the real thing. Running Compute fiddles also adds a small per-request overhead that is not experienced on live services.

Cache

Repeated executions of the same fiddle will access the same cache, even if the fiddle is edited between executions. This allows for objects processed by fiddle services to become cached within the Fastly network and be served from cache on a subsequent run.

There are several situations in which a fiddle will start again with an empty cache:

- If you refresh the Fiddle web interface

- If you hold down SHIFT while clicking RUN

- If you select Purge full cache from the Fiddle menu in the top right of the page

Understanding results

The results panel displays various types of events.

IMPORTANT: All fiddle instrumentation data is delivered asynchronously and reassembled by the Fiddle tool before being rendered in the correct order. The initial set of result data is therefore often incomplete. Allow a few seconds for all events generated by your execution session to be collected and assembled into the report.
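To see why an early view of the report can be incomplete, consider this toy model. It assumes each event carries a sequence number; the `seq` field and the `render` function are hypothetical and do not describe Fiddle's internal data format.

```python
def render(received):
    """Order whatever events have arrived so far by their sequence number."""
    return [event["name"] for event in sorted(received, key=lambda e: e["seq"])]


# Events are delivered asynchronously, so they can arrive out of order.
arrivals = [
    {"seq": 3, "name": "FETCH"},
    {"seq": 1, "name": "RECV"},
    {"seq": 2, "name": "MISS"},
]

received = []
for event in arrivals:
    received.append(event)
    # Each intermediate rendering is correctly ordered but may have gaps.
    print(render(received))
```

Only the final rendering contains every event, which is why it is worth waiting a few seconds before reading the report.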

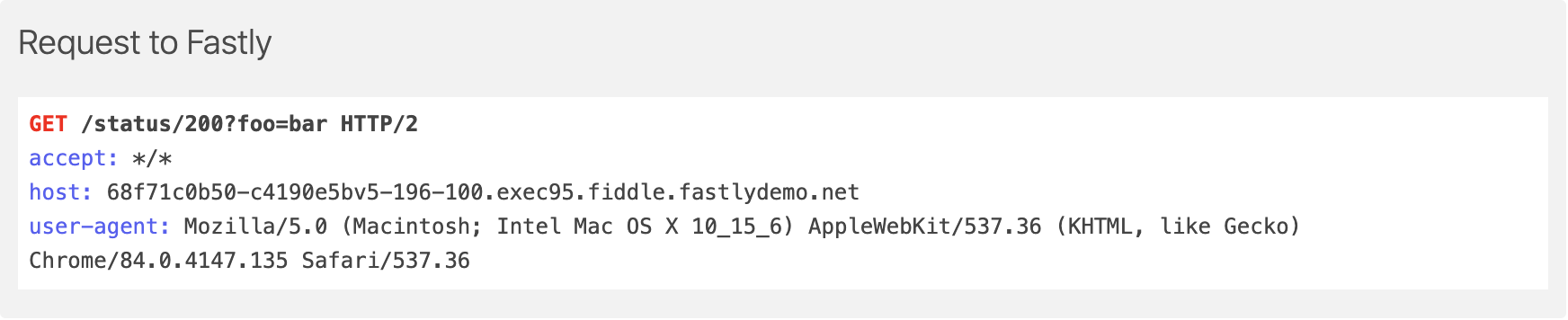

Requests and responses

The first and last blocks in the result panel (for each request) will always be the 'client' request to Fastly and the response from Fastly.

These blocks include the request and response status lines and any HTTP headers sent or received.

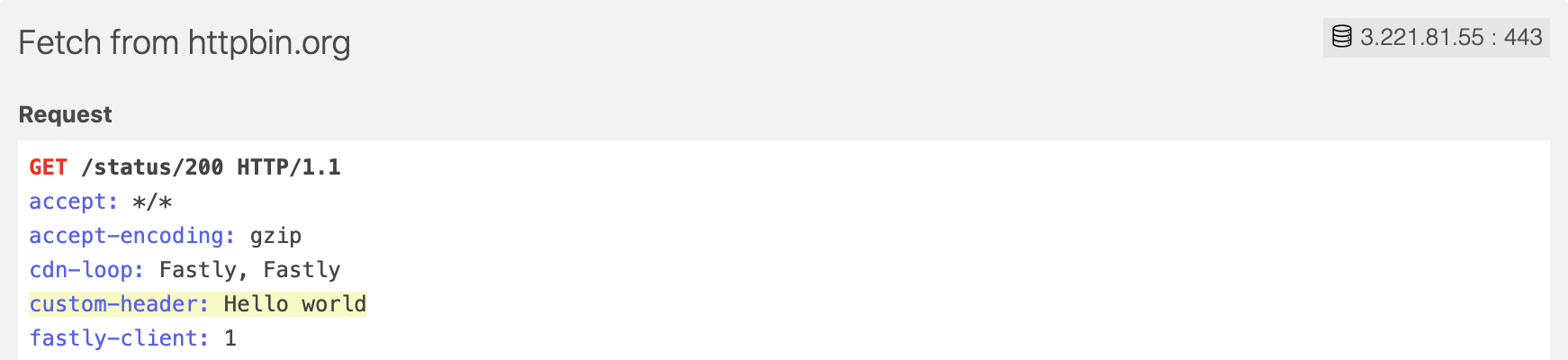

'Origin' requests, that is, requests from Fastly to origin servers, show the request and response header blocks together, along with the resolved IP address of the destination host.

HINT: In VCL fiddles, headers that you mention in your code using req.http.* syntax will be highlighted in any header blocks, since these headers are likely to be of interest to you.

VCL flow events

For each VCL subroutine (vcl_recv, vcl_hit, vcl_miss, vcl_pass, vcl_fetch, vcl_error, vcl_deliver and vcl_log) invoked during request processing, a VCL flow event will be logged by Fiddle. You will see a block describing the state of the request at the end of that subroutine and any differences from its state at the start.

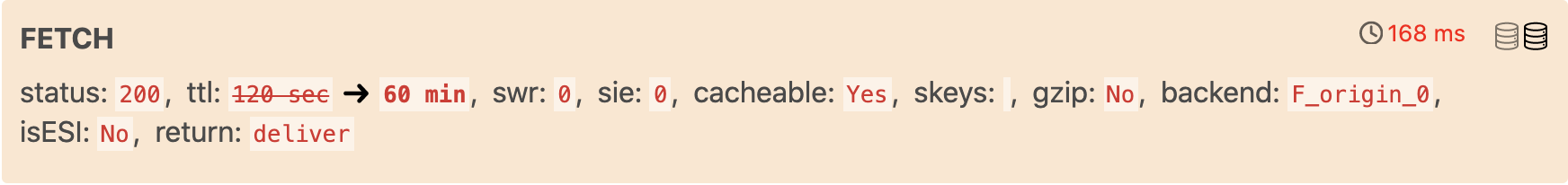

Flow events contain the following data:

- Name of subroutine: The VCL subroutine that was executed. In the example above, FETCH refers to the vcl_fetch subroutine.

- Timing information: The elapsed time since the request was first seen by Fastly. Where this duration is based on the difference between times measured by two different clocks, there is a margin of error due to clock drift. When the duration is within that margin of error, an icon is added to the time, as shown in the example above.

- Server identity: Machine ID of the Fastly cache node that executed the subroutine. The top right of the flow event shows one or more server icons, which are ordered based on when the request reached each server. The server that processed this particular subroutine is highlighted. Hover your cursor over the server icons to see the server's node ID and POP code.

- Event data: Key-value pairs indicating the state of a selection of data relevant to that subroutine. For example, the FETCH event will report ttl, and the HIT event will report hits. Any data that is changed by your VCL (or Fastly's boilerplate VCL) during the execution of the subroutine shows the diff (in the example above, the TTL is changed from 2 minutes to an hour).

All the data reported in VCL flow events is accessible for use in test expressions. For example, the "status: 200" shown in the example above could be asserted using events.where(fnName=fetch)[0].status is 200.
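As a rough mental model of what that test expression selects, the following Python sketch treats the report as a list of dictionaries. The `where` helper and the sample event values are hypothetical stand-ins, not Fiddle's test engine or real output.

```python
def where(events, **criteria):
    """Return the events whose fields match every key=value pair given."""
    return [
        event for event in events
        if all(event.get(key) == value for key, value in criteria.items())
    ]


# Made-up sample data shaped like the flow events described above.
events = [
    {"fnName": "recv"},
    {"fnName": "miss"},
    {"fnName": "fetch", "status": 200, "ttl": 3600},
    {"fnName": "deliver", "status": 200},
]

# Python equivalent of: events.where(fnName=fetch)[0].status is 200
first_fetch = where(events, fnName="fetch")[0]
assert first_fetch["status"] == 200
```

The `[0]` index picks the first matching event, which matters when a subroutine runs more than once, for example after a restart.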

Compute events

In Compute fiddles, events will be logged when the compute instance starts and terminates, and also for any host calls made by your code. Host calls happen when your code invokes a feature that exists outside the program's container. These include:

- Geolocation

- KV, Config and Secret stores

- Cache APIs

- Origin fetches

Compute events have a similar structure to VCL events, but do not have server-identity or timing information because Compute fiddles do not run on the Fastly platform.

Logs

Any log output generated by your fiddle code will be included in the report at the point that it was generated. In VCL fiddles, content emitted using the log statement will be captured and displayed, and will be attached to the VCL subroutine in which that log statement was executed.

In Compute fiddles, log output (whether to named log endpoints or STDIO) will be displayed as separate standalone events.

Sequential log lines that are sent to the same log destination will be combined and displayed as part of the same log event in the Fiddle UI.
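The combining rule can be sketched as follows, reading "sequential" as "consecutive". The `combine_logs` function, the destination names, and the messages are illustrative assumptions and not part of Fiddle.

```python
from itertools import groupby


def combine_logs(lines):
    """Merge consecutive (destination, message) pairs that share a destination."""
    return [
        (destination, [message for _, message in group])
        for destination, group in groupby(lines, key=lambda line: line[0])
    ]


lines = [
    ("stdout", "starting"),
    ("stdout", "fetching origin"),
    ("my_endpoint", "cache miss"),
    ("stdout", "done"),
]

for destination, messages in combine_logs(lines):
    print(destination, messages)
```

Under this reading, the final `stdout` line forms its own log event because a line for a different destination was emitted in between.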

Shielding

If Enable shielding is selected in request options of a VCL fiddle, and a request is about to be sent to the backend, that request will instead be transferred to the shield POP where it will go through the VCL again. This will show up in the report as a nested VCL flow sequence. For example, if a request is a cache miss on the edge and then a hit at the shield, the report may show:

RECV ➔ HASH ➔ MISS ➔ [ RECV ➔ HASH ➔ HIT ➔ DELIVER ➔ LOG ] ➔ FETCH ➔ DELIVER ➔ LOG

While this is the 'logical' sequence, in practice some subroutines are executed as background tasks asynchronously. This includes all vcl_log subroutines, and also others in cases where a request performs a background revalidation triggered by stale-while-revalidate. These background tasks are indicated with an icon. However, when shielding, background tasks triggered at the shield POP are likely to have completed before the response starts to be processed by the edge POP, so Fiddle always displays VCL flow events in state-machine order.
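One way to picture the nested sequence is as a list in which the shield POP's flow is itself a list inside the edge POP's flow. The `format_flow` function below is a hypothetical helper written for this illustration; it is not how Fiddle renders reports.

```python
def format_flow(flow):
    """Render a flow; a nested list represents the sequence run at the shield POP."""
    parts = []
    for step in flow:
        if isinstance(step, list):
            parts.append("[ " + format_flow(step) + " ]")
        else:
            parts.append(step)
    return " ➔ ".join(parts)


# Cache miss at the edge POP, then a hit at the shield POP.
edge_miss_shield_hit = [
    "RECV", "HASH", "MISS",
    ["RECV", "HASH", "HIT", "DELIVER", "LOG"],  # executed at the shield POP
    "FETCH", "DELIVER", "LOG",
]

print(format_flow(edge_miss_shield_hit))
```

The shield flow sits between the edge's MISS and FETCH steps because, from the edge POP's point of view, the shield takes the place of the backend.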

Shielding is not supported in Compute fiddles.

Secondary requests and sub-requests

In some cases, Fastly will execute more than one VCL flow or compute instance for a single fiddle execution. This can happen for a few different reasons:

- You can configure a fiddle to run more than one request in the fiddle web interface. These requests are sent sequentially but use the same HTTP session. The report will show NEW REQUEST between them.

- If Follow redirects is enabled, Fiddle will insert additional requests into the queue automatically when needed. These will also show up as NEW REQUEST. (Fiddle will only follow one redirect, so it will not follow a redirect response if it comes from a request that was itself created by a redirect.)

- If your VCL code includes a restart, processing of the current request will move back to vcl_recv, and the fiddle report will show a RESTART separator.

- If your VCL code (via h2.push) or a Link: rel=preload header invokes an HTTP/2 server push, the push will be processed as a new VCL flow, and listed underneath the request that triggered it, with an HTTP/2 SERVER PUSH separator. Be aware that H2 push requests actually execute in parallel with the parent, but Fiddle lists them sequentially to make the report clearer. The Compute platform does not support H2 push.

- If the content body of the HTTP response from origin contains Edge Side Includes (ESI) tags, these are processed sequentially by Fastly after the main request processing has completed and the content is moving into the output buffer. ESI requests trigger a new VCL flow for each include, and are indicated with an EDGE SIDE INCLUDE separator. The Compute platform does not support ESI natively, but it can be implemented in Compute code or used via a library implementation such as the esi crate for Rust.
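The "follow only one redirect" rule from the Follow redirects item above can be sketched like this. Everything here is an assumption made for illustration: the `run_requests` function, the `fetch` callable, the set of status codes treated as redirects, and the choice to run the redirected request immediately after the request that triggered it.

```python
REDIRECT_STATUSES = (301, 302, 303, 307, 308)  # assumed set, for illustration


def run_requests(configured, fetch):
    """Run requests in order, following at most one redirect per configured request.

    `fetch` is a hypothetical callable that returns (status, location).
    """
    queue = [(url, False) for url in configured]  # (url, created_by_redirect)
    report = []
    while queue:
        url, created_by_redirect = queue.pop(0)
        status, location = fetch(url)
        report.append((url, status))
        # A request that was itself created by a redirect is never followed.
        if status in REDIRECT_STATUSES and location and not created_by_redirect:
            queue.insert(0, (location, True))
    return report


# Fake origin: /a redirects to /b, which redirects to /c.
responses = {"/a": (301, "/b"), "/b": (302, "/c"), "/c": (200, None)}
print(run_requests(["/a"], responses.get))
```

In this model the run stops at the response for `/b`, so a second hop such as `/c` has to be configured as its own request if you want to inspect it.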